DataTongs Walkthrough

How to cast your data with SchemaTongs

How to cast your data with SchemaTongs

This guide provides a walkthrough for using DataTongs to extract existing table data into scripts suitable for source control, allowing future modifications to be made. The primary use case for these is the deployment of seed or lookup data for each release, but it can also be useful for generating scripts that deploy test data for your CI.

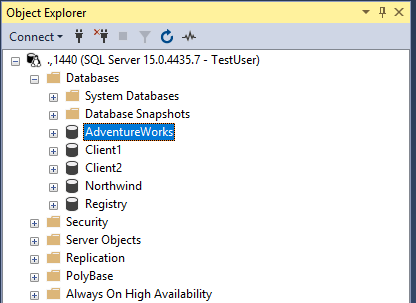

To begin, ensure access to a running Microsoft SQL Server with an existing database from which to extract the schema. Authentication can be configured using either SQL Server Authentication or Windows Authentication, depending on the server setup. This tutorial uses a Docker container running a Linux SQL Server image on a Windows laptop, connecting via SQL Server Authentication.

For this example, we are using the AdventureWorks database populated with the test data supplied by Microsoft.

Install DataTongs and configure the AppSettings.json file with the server, login

details, and the first database to extract data from. Specify the directory for the new product

and the names for the Product and Template. Renaming a template later is possible but cumbersome,

as it affects both the template file and folder, as well as the product file. Below is an example

of the edited AppSettings.json.

{

"Source": {

"Server": ".,1440",

"User": "TestUser",

"Password": "*****************",

"Database": "AdventureWorks"

},

"Tables": {

"Person.Address": "AddressID",

"Person.BusinessEntity": "BusinessEntityID",

"Person.BusinessEntityAddress": "BusinessEntityID,AddressID,AddressTypeID",

"Person.BusinessEntityContact": "BusinessEntityID,PersonID,ContactTypeID",

"Person.EmailAddress": "BusinessEntityID,EmailAddressID",

"Person.PersonPhone": "BusinessEntityID,PhoneNumber,PhoneNumberTypeID",

"Person.StateProvince": "StateProvinceID",

"Production.Location": "LocationID"

},

"TableFilters": {

},

"OutputPath": "C:/Temp/TestData/AdventureWorks",

"ShouldCast": {

"MergeUpdate": true,

"MergeDelete": false

}

}

The Tables section is where you provide the list of tables to extract data from and

the key columns that will be used to order the data and also match it for updating in the generated

MERGE script.

The TableFilters section allows you to optionally provide a table name and filter

expression, used in a WHERE clause, that will filter the data extracted and will also be used

if you opt to include the DELETE section in the merge script. Those entries should be shaped like

the section before with the table name as the key and the filter expression as the value.

The ShouldCast section is where you define whether your MERGE scripts will also

update and/or delete data. The MERGE will always include the section to insert missing rows.

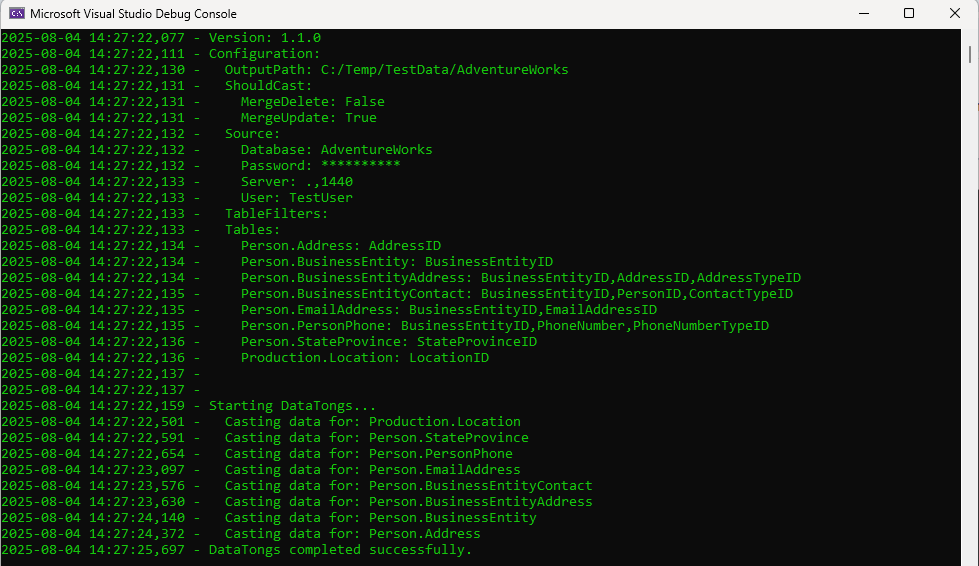

This is a console application, so it is recommended that you run from a command prompt. The need to create directories may require the command prompt to be opened in Administrator mode.

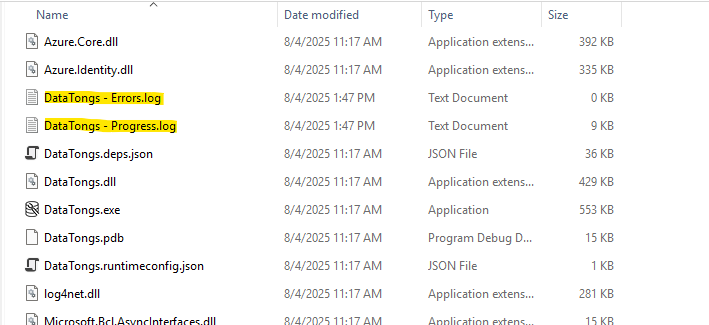

The tool will output progress to the console window and also generate log files for progress and an error log with details about any errors encountered. A successful run should look something like this with more detail depending on how many tables you configured for extraction.

The progress log will contain the same text as the console window and gets backed up to a numbered folder between runs, if possible, in case you need to refer back to a prior run. Error messages will go into the errors log. The files and backup folders are produced in the folder containing DataTongs.

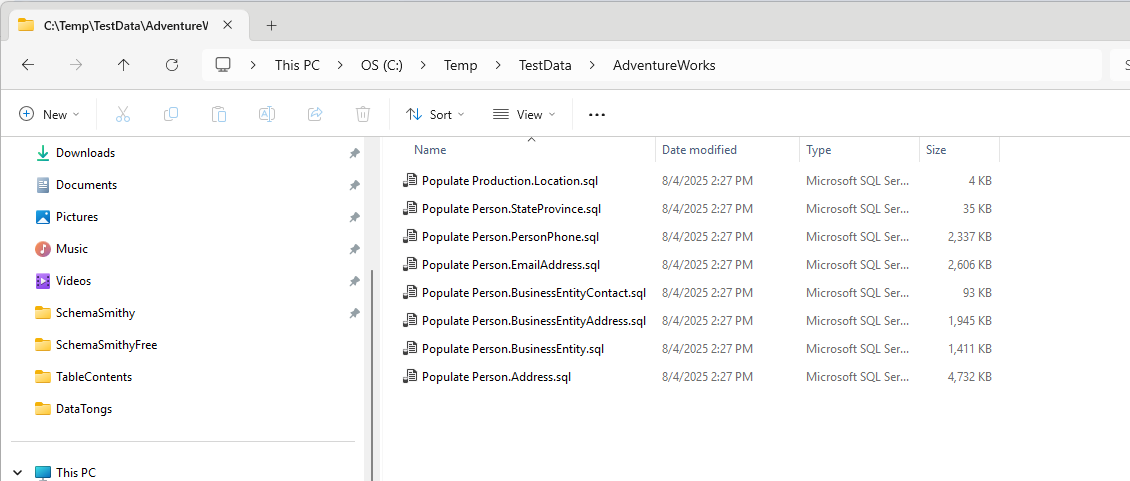

The tool will output scripts for the table(s) specified in the AppSettings.json to

the folder you specified in OutputPath like this:

An example merge script would look like this:

DECLARE @v_json NVARCHAR(MAX) = '[

{"Availability":0.00,"CostRate":0.0000,"LocationID":1,"ModifiedDate":"2008-04-30T00:00:00","Name":"Tool Crib"},

{"Availability":0.00,"CostRate":0.0000,"LocationID":2,"ModifiedDate":"2008-04-30T00:00:00","Name":"Sheet Metal Racks"},

{"Availability":0.00,"CostRate":0.0000,"LocationID":3,"ModifiedDate":"2008-04-30T00:00:00","Name":"Paint Shop"},

{"Availability":0.00,"CostRate":0.0000,"LocationID":4,"ModifiedDate":"2008-04-30T00:00:00","Name":"Paint Storage"},

{"Availability":0.00,"CostRate":0.0000,"LocationID":5,"ModifiedDate":"2008-04-30T00:00:00","Name":"Metal Storage"},

{"Availability":0.00,"CostRate":0.0000,"LocationID":6,"ModifiedDate":"2008-04-30T00:00:00","Name":"Miscellaneous Storage"},

{"Availability":0.00,"CostRate":0.0000,"LocationID":7,"ModifiedDate":"2008-04-30T00:00:00","Name":"Finished Goods Storage"},

{"Availability":96.00,"CostRate":22.5000,"LocationID":10,"ModifiedDate":"2008-04-30T00:00:00","Name":"Frame Forming"},

{"Availability":108.00,"CostRate":25.0000,"LocationID":20,"ModifiedDate":"2008-04-30T00:00:00","Name":"Frame Welding"},

{"Availability":120.00,"CostRate":14.5000,"LocationID":30,"ModifiedDate":"2008-04-30T00:00:00","Name":"Debur and Polish"},

{"Availability":120.00,"CostRate":15.7500,"LocationID":40,"ModifiedDate":"2008-04-30T00:00:00","Name":"Paint"},

{"Availability":80.00,"CostRate":18.0000,"LocationID":45,"ModifiedDate":"2008-04-30T00:00:00","Name":"Specialized Paint"},

{"Availability":120.00,"CostRate":12.2500,"LocationID":50,"ModifiedDate":"2008-04-30T00:00:00","Name":"Subassembly"},

{"Availability":120.00,"CostRate":12.2500,"LocationID":60,"ModifiedDate":"2008-04-30T00:00:00","Name":"Final Assembly"}

]';

SET IDENTITY_INSERT [Production].[Location] ON;

MERGE INTO [Production].[Location] AS Target

USING (

SELECT [Availability],[CostRate],[LocationID],[ModifiedDate],[Name]

FROM OPENJSON(@v_json)

WITH (

[Availability] DECIMAL(8, 2),

[CostRate] SMALLMONEY,

[LocationID] SMALLINT,

[ModifiedDate] DATETIME,

[Name] NAME

)

) AS Source

ON Source.[LocationID] = Target.[LocationID]

WHEN MATCHED AND (NOT (Target.[Availability] = Source.[Availability] OR (Target.[Availability] IS NULL AND Source.[Availability] IS NULL))

AND NOT (Target.[CostRate] = Source.[CostRate] OR (Target.[CostRate] IS NULL AND Source.[CostRate] IS NULL))

AND NOT (Target.[ModifiedDate] = Source.[ModifiedDate] OR (Target.[ModifiedDate] IS NULL AND Source.[ModifiedDate] IS NULL))

AND NOT (Target.[Name] = Source.[Name] OR (Target.[Name] IS NULL AND Source.[Name] IS NULL))) THEN

UPDATE SET

[Availability] = Source.[Availability],

[CostRate] = Source.[CostRate],

[ModifiedDate] = Source.[ModifiedDate],

[Name] = Source.[Name]

WHEN NOT MATCHED BY TARGET THEN

INSERT (

[Availability],

[CostRate],

[LocationID],

[ModifiedDate],

[Name]

) VALUES (

Source.[Availability],

Source.[CostRate],

Source.[LocationID],

Source.[ModifiedDate],

Source.[Name]

)

;

SET IDENTITY_INSERT [Production].[Location] OFF;

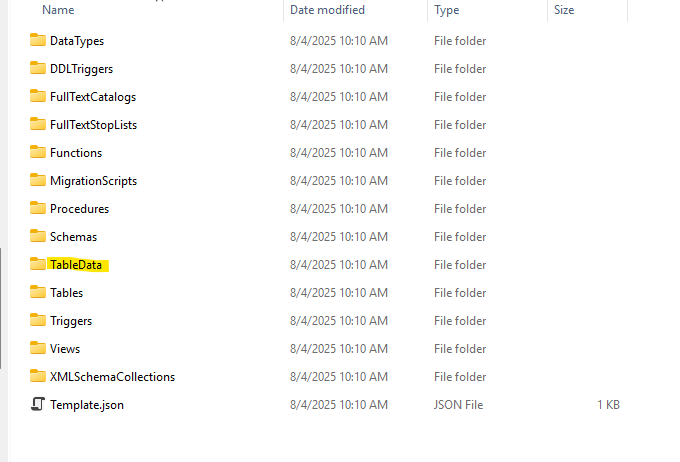

For any data that you want to include in a deployment, place the scripts into the TableData folder under the appropriate template in your product repository. SchemaQuench will automatically run those scripts every time and loop if needed to handle dependencies.

You can control execution order by renaming the scripts. This may be necessary to speed up the update and reduce the need for SchemaQuench to have to loop to handle dependency order issues.